TFLSTM-18

|   | Name                     | Type                 | Params |
|---|--------------------------|----------------------|--------|
| 0 | criterion                | WeightedMSELoss      | 0      |
| 1 | model                    | TransformerLSTMModel | 4.3 M  |
| 2 | model.encoder_grn        | GatedResidualNetwork | 199 K  |
| 3 | model.decoder_grn        | GatedResidualNetwork | 199 K  |
| 4 | model.encoder            | LSTM                 | 1.1 M  |
| 5 | model.decoder            | LSTM                 | 1.1 M  |
| 6 | model.transformer_blocks | ModuleList           | 1.8 M  |
| 7 | model.output_head        | Linear               | 257    |

Trainable params: 4.3 M

Non-trainable params: 0

Total params: 4.3 M

Total estimated model params size (MB): 17
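As a sanity check on the summary, the parameter counts can be roughly reproduced by hand. This is a sketch under assumptions that are not stated in this entry: a model dimension of 256 (inferred from the 257-parameter output head, i.e. 256 weights plus 1 bias), two-layer unidirectional LSTMs whose input size equals the model dimension, and float32 weights. The LSTM formula follows the PyTorch convention of two bias vectors per gate.

```python
def linear_params(in_features, out_features, bias=True):
    """Parameter count of a dense layer: weight matrix plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)


def lstm_params(input_size, hidden_size, num_layers=1):
    """Parameter count of a unidirectional LSTM (PyTorch layout).

    Each layer has 4 gates, each with input weights, recurrent weights,
    and two bias vectors (bias_ih and bias_hh).
    """
    total = 0
    for layer in range(num_layers):
        layer_in = input_size if layer == 0 else hidden_size
        total += 4 * (layer_in * hidden_size
                      + hidden_size * hidden_size
                      + 2 * hidden_size)
    return total


# ASSUMPTION: model dimension inferred from the 257-parameter output head.
D_MODEL = 256
# ASSUMPTION: two stacked LSTM layers; the entry does not state the depth.
NUM_LSTM_LAYERS = 2

head = linear_params(D_MODEL, 1)                       # compare with "257"
lstm = lstm_params(D_MODEL, D_MODEL, NUM_LSTM_LAYERS)  # compare with "1.1 M"

# 4.3 M parameters at 4 bytes each (float32), compare with "17" MB.
size_mb = 4.3e6 * 4 / 1e6

print(head, lstm, size_mb)
```

Under these assumptions the head and LSTM counts are consistent with the table, and 4.3 M float32 parameters come to about 17 MB, matching the reported size.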

Same as TFLSTM-16, but without Bdot in the past inputs, and with the measured field added back to the past inputs as normal. We also set the encoder/decoder GRN hidden dimension to the model dimension instead of the input dimension.
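To illustrate what the GRN hidden-dimension change does to capacity, here is a rough parameter count for the two choices. The layer layout is an assumption (a TFT-style GRN: two dense layers, a GLU gate, a skip projection when input and output sizes differ, and a LayerNorm), and `INPUT_DIM = 2` and `D_MODEL = 256` are hypothetical values, not taken from the actual `GatedResidualNetwork` code.

```python
def linear_params(n_in, n_out):
    """Weights plus bias of a dense layer."""
    return n_in * n_out + n_out


def grn_params(input_dim, hidden_dim, output_dim):
    """Parameter count of an ASSUMED TFT-style gated residual network."""
    fc1 = linear_params(input_dim, hidden_dim)        # input -> hidden
    fc2 = linear_params(hidden_dim, hidden_dim)       # hidden -> hidden
    gate = linear_params(hidden_dim, 2 * output_dim)  # GLU: value and gate halves
    # Skip projection is only needed when the residual shapes differ.
    skip = linear_params(input_dim, output_dim) if input_dim != output_dim else 0
    norm = 2 * output_dim                             # LayerNorm scale and shift
    return fc1 + fc2 + gate + skip + norm


INPUT_DIM = 2   # hypothetical number of input features
D_MODEL = 256   # hypothetical model dimension

# Hidden dim tied to the input dimension (narrow bottleneck).
narrow = grn_params(INPUT_DIM, INPUT_DIM, D_MODEL)
# Hidden dim tied to the model dimension (the choice described above).
wide = grn_params(INPUT_DIM, D_MODEL, D_MODEL)

print(narrow, wide)
```

With these hypothetical values the wide variant has roughly 70 times more parameters than the narrow one, most of them in the hidden-to-hidden and gate layers. The wide count happens to land near the 199 K reported in the summary, but that agreement does not confirm either the layout or the dimensions assumed here.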

Trained on GPUs 1-3 on ml004

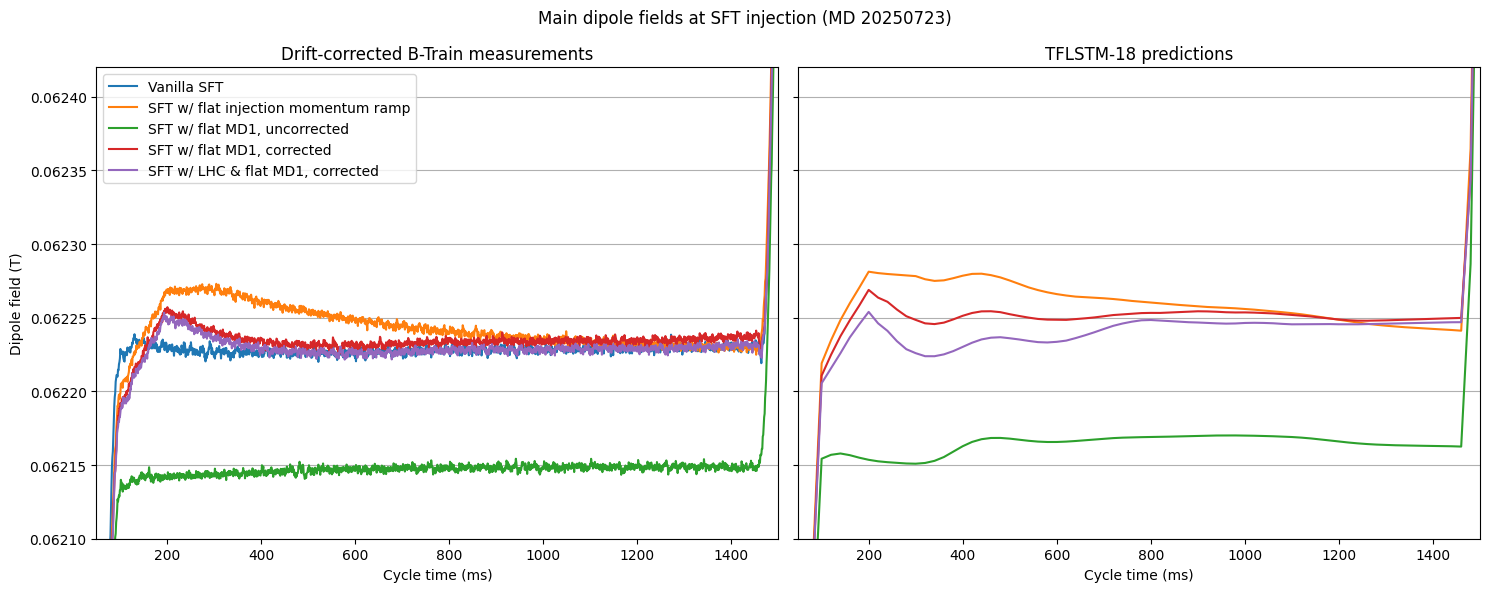

We evaluate it on the dataset from Dedicated MD 2025-07-23 and find that it can predict the flat bottom field within on drift-corrected data, i.e. the model learns the real field.