TFLSTM-33

```text
┏━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━┓
┃   ┃ Name                     ┃ Type                 ┃ Params ┃
┡━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━┩
│ 0 │ criterion                │ WeightedMSELoss      │      0 │
│ 1 │ model                    │ TransformerLSTMModel │  4.3 M │
│ 2 │ model.encoder_grn        │ GatedResidualNetwork │  199 K │
│ 3 │ model.decoder_grn        │ GatedResidualNetwork │  199 K │
│ 4 │ model.encoder            │ LSTM                 │  1.1 M │
│ 5 │ model.decoder            │ LSTM                 │  1.1 M │
│ 6 │ model.transformer_blocks │ ModuleList           │  1.8 M │
│ 7 │ model.output_head        │ Linear               │    257 │
└───┴──────────────────────────┴──────────────────────┴────────┘
Trainable params: 4.3 M
Non-trainable params: 0
Total params: 4.3 M
Total estimated model params size (MB): 17
```
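A quick consistency check on this summary, written as a plain-Python sketch: the 17 MB estimate matches 4.3 M parameters stored as 4-byte float32 values, and the 257 parameters of `model.output_head` are consistent with a `Linear(256, 1)` with bias. The LSTM count is only a plausibility check under assumed settings (input and hidden size 256, 2 stacked layers, unidirectional, bias on); the actual TFLSTM-33 configuration is not recorded in these notes.

```python
def linear_params(in_features: int, out_features: int, bias: bool = True) -> int:
    """Parameter count of torch.nn.Linear: weight (out x in) plus optional bias (out)."""
    return in_features * out_features + (out_features if bias else 0)


def lstm_params(input_size: int, hidden_size: int, num_layers: int = 1) -> int:
    """Parameter count of a unidirectional torch.nn.LSTM with bias=True.

    Each layer has weight_ih (4H x in), weight_hh (4H x H) and two bias
    vectors, bias_ih and bias_hh (4H each). Layers after the first take H inputs.
    """
    total = 0
    for layer in range(num_layers):
        layer_in = input_size if layer == 0 else hidden_size
        total += 4 * hidden_size * (layer_in + hidden_size) + 2 * 4 * hidden_size
    return total


def fp32_size_mb(num_params: int) -> float:
    """Size estimate in MB (1e6 bytes), assuming 4 bytes per float32 parameter."""
    return num_params * 4 / 1e6


HIDDEN = 256      # assumption: inferred from the 257-parameter output head
NUM_LAYERS = 2    # assumption: not stated in the run notes

print(linear_params(HIDDEN, 1))                  # 257
print(lstm_params(HIDDEN, HIDDEN, NUM_LAYERS))   # 1052672
print(round(fp32_size_mb(4_300_000), 1))         # 17.2
```

Under those assumptions, one LSTM comes to 1,052,672 parameters, which rounds to the 1.1 M shown for `model.encoder` and `model.decoder`.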

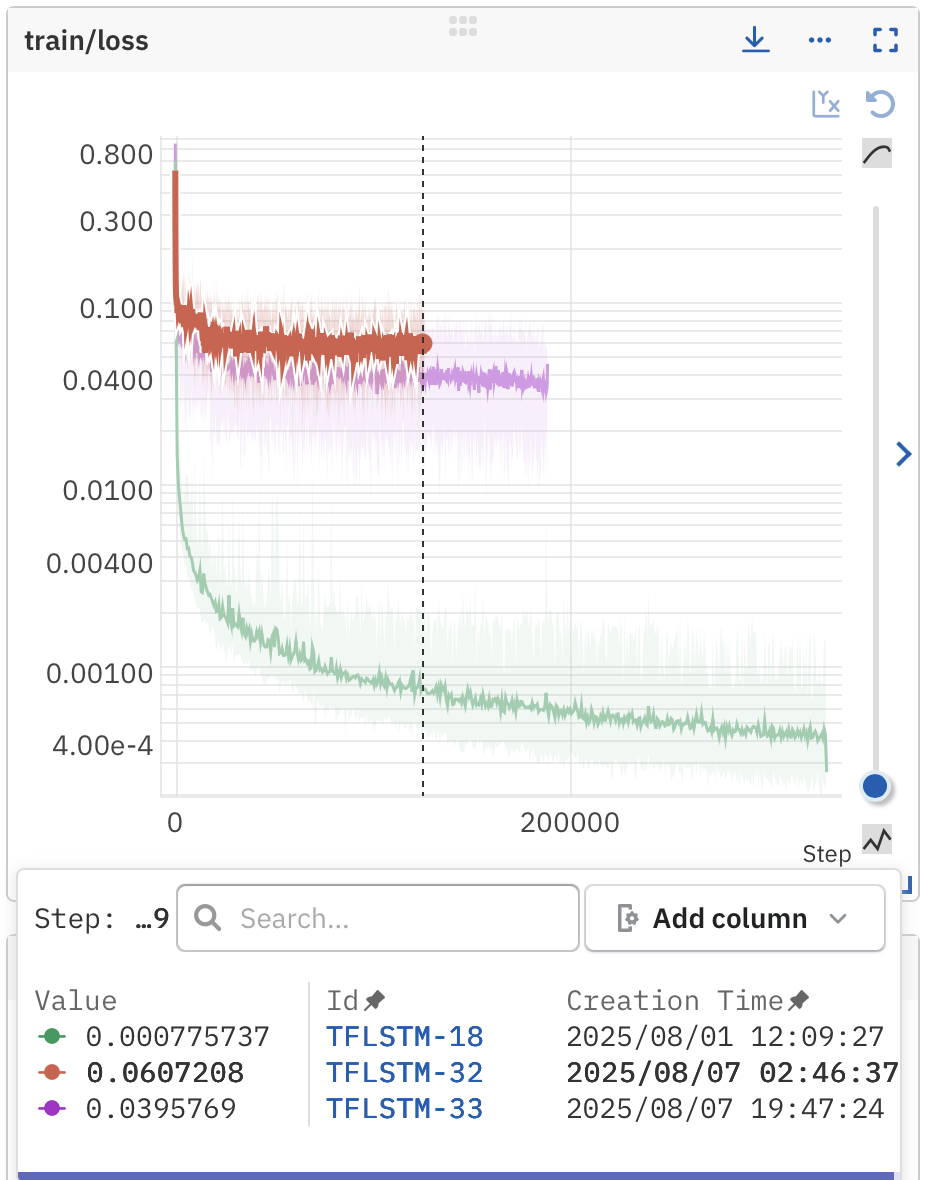

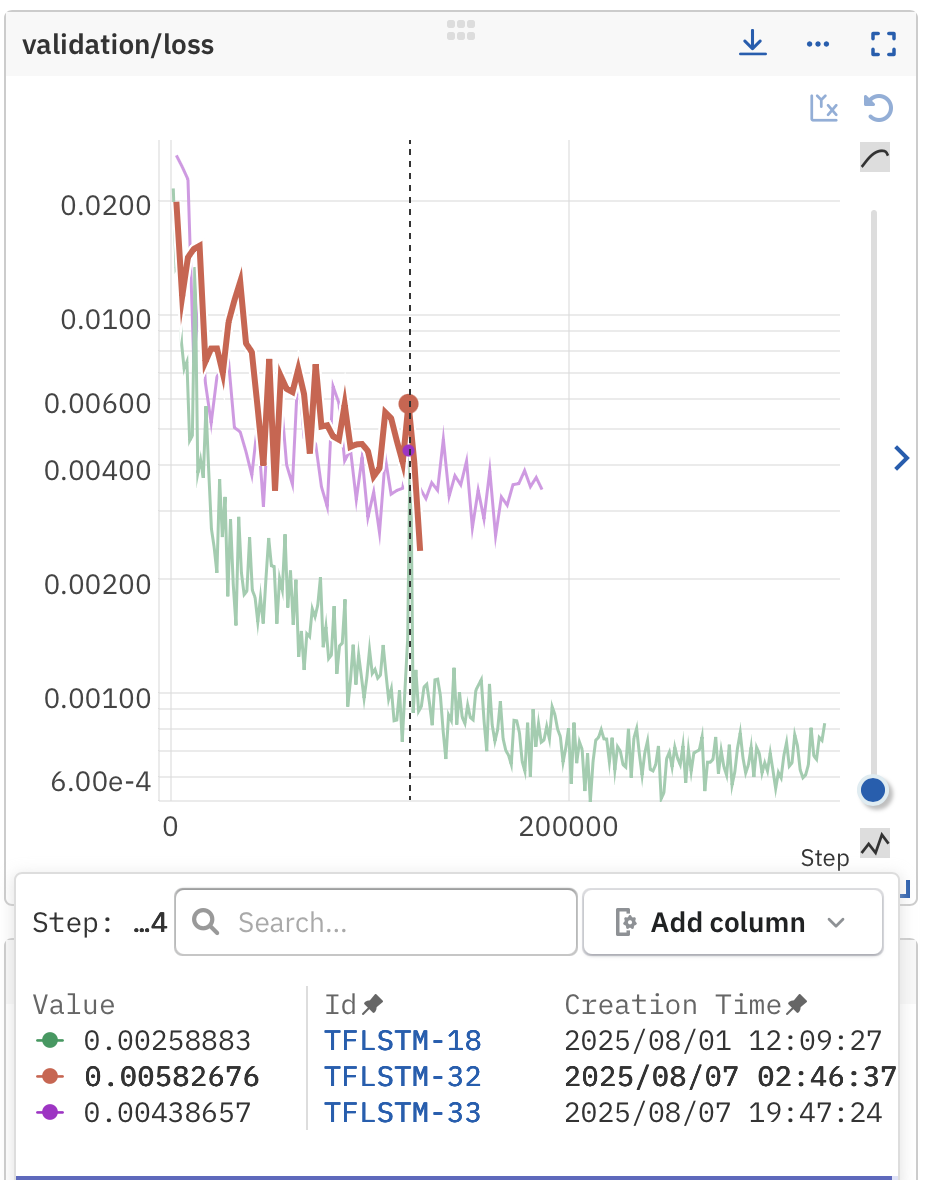

TransformerLSTM with the same parameters as TFLSTM-32, but with masking of padded sequences.
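One common way to mask padded steps is sketched below in PyTorch, assuming each batch carries the real length of every sequence. `padding_mask` and `masked_mse` are hypothetical helpers for illustration, not the run's `WeightedMSELoss`, whose weighting is not described here; the point is only that padded time steps contribute neither to the loss nor to its gradient.

```python
import torch


def padding_mask(lengths: torch.Tensor, max_len: int) -> torch.Tensor:
    """Boolean mask of shape (batch, max_len) that is True at real (non-padded) steps."""
    steps = torch.arange(max_len, device=lengths.device)
    return steps.unsqueeze(0) < lengths.unsqueeze(1)


def masked_mse(pred: torch.Tensor, target: torch.Tensor, mask: torch.Tensor) -> torch.Tensor:
    """Mean squared error over real time steps only; padded steps contribute nothing."""
    sq_err = (pred - target) ** 2               # (batch, time)
    weights = mask.to(sq_err.dtype)             # 1.0 at real steps, 0.0 at padding
    return (sq_err * weights).sum() / weights.sum().clamp(min=1.0)


lengths = torch.tensor([3, 5])                  # real lengths of two padded sequences
mask = padding_mask(lengths, max_len=5)
pred = torch.zeros(2, 5)
target = torch.ones(2, 5)
print(mask)
print(masked_mse(pred, target, mask))           # tensor(1.)
```

If the transformer blocks are standard `torch.nn.TransformerEncoderLayer` modules (not confirmed here), the inverted mask `~mask` is the form their `src_key_padding_mask` argument expects, since `True` there marks positions to ignore.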

We see a much poorer training loss evolution as a result of packing the RNN sequences, while the validation loss is much less affected. This is likely because the model no longer learns the padded values, which can make up much of the decoder input tensor given the wide `randomize_seq_len` bounds (180 to 1020 real samples per sequence).
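Packing, sketched below with toy sizes, lets `torch.nn.LSTM` step through only the real samples of each sequence, so padded steps produce no outputs and no gradients. This is a minimal illustration of `pack_padded_sequence` / `pad_packed_sequence` under assumed shapes, not the actual encoder/decoder code of this run.

```python
import torch
from torch import nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

torch.manual_seed(0)

batch, max_len, n_features, hidden = 2, 6, 4, 8   # toy sizes, not the run's config
x = torch.randn(batch, max_len, n_features)       # values past each real length act as padding
lengths = torch.tensor([6, 3])                    # real length of each sequence

lstm = nn.LSTM(n_features, hidden, batch_first=True)

# Pack so the LSTM only steps through real samples; enforce_sorted=False accepts
# the batch in any length order. The lengths tensor must be on the CPU.
packed = pack_padded_sequence(x, lengths.cpu(), batch_first=True, enforce_sorted=False)
packed_out, (h_n, c_n) = lstm(packed)

# Unpack back to a padded (batch, max_len, hidden) tensor; padded steps are filled with 0.
out, out_lengths = pad_packed_sequence(packed_out, batch_first=True, total_length=max_len)

print(out.shape)                                  # torch.Size([2, 6, 8])
print(out[1, 3:].abs().sum().item())              # 0.0
```

With `enforce_sorted=False`, PyTorch sorts the batch internally and restores the original order in both `out` and `h_n`, so `h_n` holds the state at each sequence's last real step rather than at the padded end.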

Note that, compared to TFLSTM-18, this run uses only 1 GPU, with the same per-GPU batch size of 64.