- `~/cernbox/hysteresis/checkpoints/tft_transfer_MD20241119.ckpt`
- `~/cernbox/hysteresis/checkpoints/tft_finetune_MD20241119.ckpt`

Follows up on *TFT on simulation data without downsampling* and *Fitting models to simulated hysteresis data*.

We perform transfer learning on the Dipole datasets, keeping only the following TFT layers trainable:

- `attn_grn`
- `attn_gate2`
- `attn_norm2`
- `output_layer`

This is achieved by leaving all other parameters out of the `model.parameters()` generator, so that only these layers are optimized.

The full TFT model has 6.2M parameters, of which only 397k (about 6%) are trainable in this stage.
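The parameter selection above can be sketched as a name-based filter. This is a minimal sketch, assuming the four layer names appear as components of the parameter names (e.g. `model.attn_grn.fc.weight`); the helpers `is_trainable` and `count_params` are hypothetical and not taken from the actual training code.

```python
# Layer names from the notes; assumed to appear as dot-separated components of parameter names.
TRAINABLE_LAYERS = ("attn_grn", "attn_gate2", "attn_norm2", "output_layer")


def is_trainable(param_name: str, trainable=TRAINABLE_LAYERS) -> bool:
    """True if any dot-separated component of the name is one of the trainable layers."""
    return any(part in trainable for part in param_name.split("."))


def count_params(named_sizes, trainable=TRAINABLE_LAYERS):
    """Return (total, trainable) parameter counts from (name, numel) pairs."""
    total = sum(numel for _, numel in named_sizes)
    n_train = sum(numel for name, numel in named_sizes if is_trainable(name, trainable))
    return total, n_train
```

With PyTorch, the same predicate could filter `model.named_parameters()` before the generator reaches the optimizer, e.g. `(p for n, p in model.named_parameters() if is_trainable(n))`. Matching whole name components rather than substrings avoids accidentally unfreezing a layer such as a hypothetical `attn_grn_extra`. Additionally calling `p.requires_grad_(False)` on the excluded parameters would also skip computing their gradients.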

The learning rate follows a `OneCycleLR` schedule with `max_lr` = …, `pct_start` = 0.1 and `div_factor` = 10, so the learning rate effectively starts at `max_lr / 10`; this stage runs for 50 epochs.
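To make the schedule concrete, here is a pure-Python sketch of a two-phase cosine one-cycle schedule, written to follow PyTorch's `OneCycleLR` defaults as I understand them (`anneal_strategy='cos'`, `three_phase=False`, `final_div_factor=1e4`; momentum cycling is ignored). The `max_lr = 1e-3` and 20 steps per epoch in the usage are hypothetical placeholders, since the actual values are not recorded here.

```python
import math


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.1, div_factor=10.0,
                 final_div_factor=1e4):
    """Learning rate at `step` (0-based) of a two-phase cosine one-cycle schedule."""
    initial_lr = max_lr / div_factor           # warm-up starts here
    min_lr = initial_lr / final_div_factor     # annealing ends here
    warmup_end = pct_start * total_steps - 1   # last step of the warm-up phase
    if step <= warmup_end:
        start, end, pct = initial_lr, max_lr, step / warmup_end
    else:
        start, end = max_lr, min_lr
        pct = (step - warmup_end) / (total_steps - 1 - warmup_end)
    # Cosine interpolation: equals `start` at pct=0 and `end` at pct=1
    return end + (start - end) / 2.0 * (math.cos(math.pi * pct) + 1.0)


# Hypothetical usage: 50 epochs x 20 steps, placeholder max_lr
total = 50 * 20
lrs = [one_cycle_lr(s, total, max_lr=1e-3) for s in range(total)]
```

In PyTorch itself the equivalent would be `torch.optim.lr_scheduler.OneCycleLR(optimizer, max_lr=..., epochs=50, steps_per_epoch=..., pct_start=0.1, div_factor=10)`, stepped once per batch.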

Once training has converged, the rest of the model is unfrozen and trained with another `OneCycleLR` schedule, this time with a 5x higher `max_lr`.
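The two stages can be summarised as configurations that differ only in which parameters are trainable and in `max_lr`. This is a hypothetical sketch: `StageConfig` and `BASE_MAX_LR` are illustrative names, the base learning rate is a placeholder, and it assumes `pct_start` and `div_factor` stay the same in the fine-tuning stage.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class StageConfig:
    trainable: Optional[Tuple[str, ...]]  # None means every parameter is trainable
    max_lr: float
    pct_start: float = 0.1
    div_factor: float = 10.0
    epochs: Optional[int] = None  # None where the notes do not state it

    @property
    def initial_lr(self) -> float:
        # OneCycleLR starts from max_lr / div_factor
        return self.max_lr / self.div_factor


BASE_MAX_LR = 1e-3  # hypothetical placeholder, not the value used in the experiments

transfer = StageConfig(
    trainable=("attn_grn", "attn_gate2", "attn_norm2", "output_layer"),
    max_lr=BASE_MAX_LR,
    epochs=50,
)
# Fine-tuning: unfreeze everything and use a 5x higher max_lr (other settings assumed unchanged)
finetune = replace(transfer, trainable=None, max_lr=5 * transfer.max_lr, epochs=None)
```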

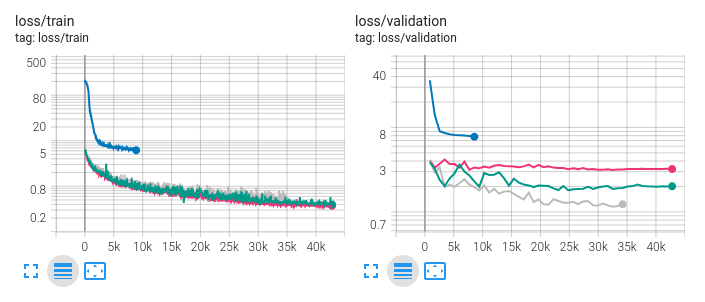

The training results are seen below:

Here, blue is the transfer-learning stage, and purple, green and gray are the fine-tuning stages, which use `min_ctxt_seq_len` = … respectively.

The validation loss improves significantly for the fine-tuning stages that zero out more context elements.
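One way such context zeroing could work is sketched below. This is an assumption about the mechanism, not the actual implementation: it supposes that `min_ctxt_seq_len` is the minimum number of most recent context steps that are kept, and that a random number of the oldest steps is zeroed. Under this assumption, a smaller `min_ctxt_seq_len` allows more context elements to be zeroed out. The function `zero_out_context` is hypothetical.

```python
import random


def zero_out_context(context, min_ctxt_seq_len, rng=random):
    """Keep a random-length suffix of `context` (at least `min_ctxt_seq_len` steps)
    and replace the older, leading steps with zeros."""
    n = len(context)
    if not 0 < min_ctxt_seq_len <= n:
        raise ValueError("min_ctxt_seq_len must be in [1, len(context)]")
    keep = rng.randint(min_ctxt_seq_len, n)  # inclusive on both ends
    n_zero = n - keep
    return [0.0] * n_zero + list(context[n_zero:])


# Hypothetical usage with a seeded generator
ctx = [float(i + 1) for i in range(10)]
masked = zero_out_context(ctx, min_ctxt_seq_len=4, rng=random.Random(0))
```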

Fine-tuning yields roughly an order-of-magnitude improvement on the validation data over the transfer-learning stage.

Improvements with just transfer learning

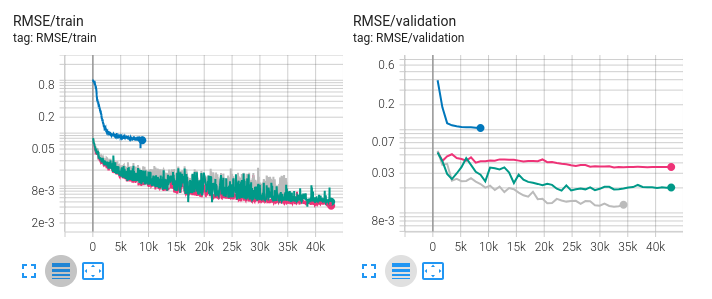

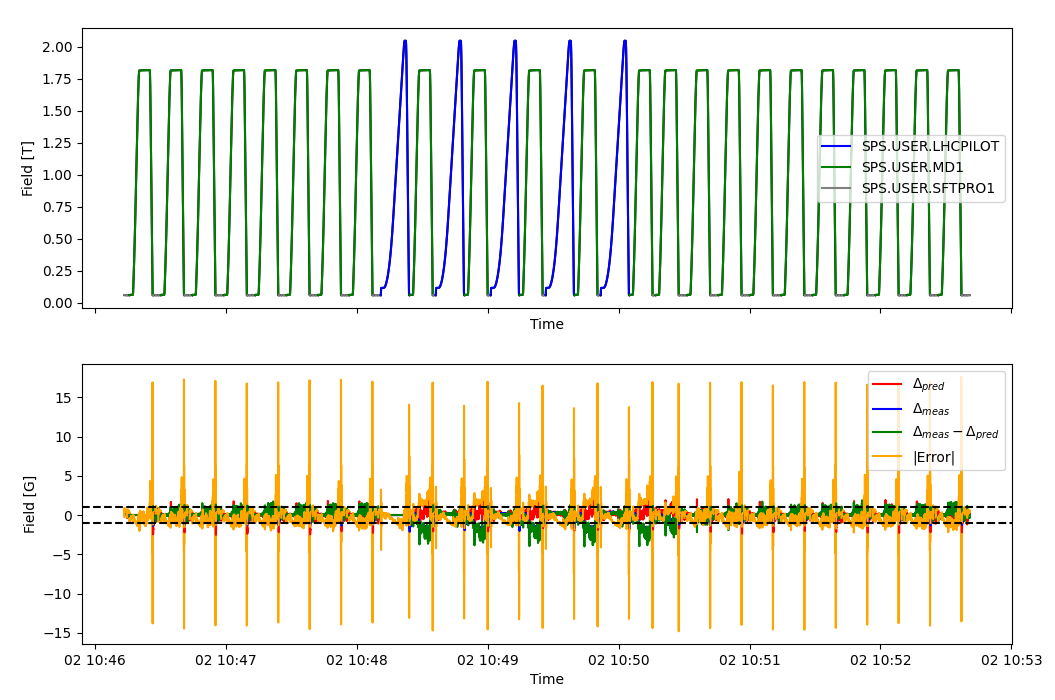

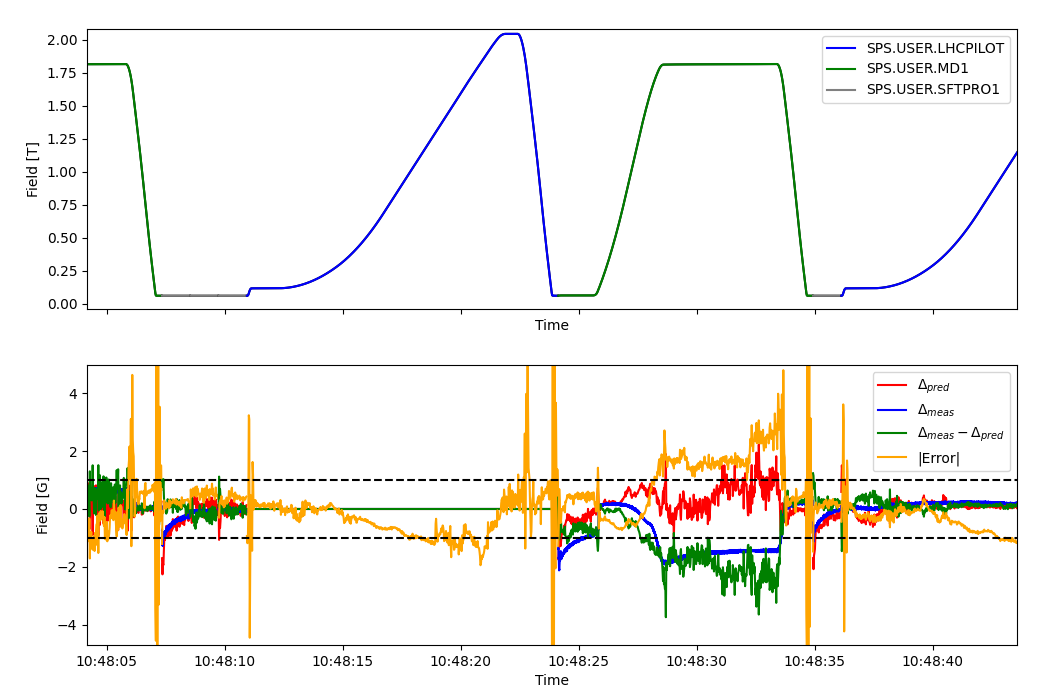

Surprisingly, the transfer-learning stage alone already gives very good predictions, often within 1 Gauss. Most of the remaining artifacts come from ripples in the measured (ground-truth) data, which do not appear in predictions made from the programmed current.
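A simple way to quantify "often within 1 Gauss" is the fraction of samples whose absolute error falls inside that tolerance. This minimal sketch assumes the field is stored in tesla (1 G = 1e-4 T); `fraction_within` is a hypothetical helper, not part of the evaluation notebook.

```python
GAUSS_IN_TESLA = 1e-4  # 1 G = 1e-4 T


def fraction_within(y_true, y_pred, tol_gauss=1.0):
    """Fraction of samples with |prediction - measurement| <= tol_gauss,
    assuming both sequences are fields in tesla."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    tol = tol_gauss * GAUSS_IN_TESLA
    hits = sum(1 for t, p in zip(y_true, y_pred) if abs(p - t) <= tol)
    return hits / len(y_true)
```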

Freezing scaler parameters

Whenever new parameters

New simulations

Pretraining on field simulations gives the model PRETFTMBI-25, which is trained on Jiles-Atherton simulated data and then fine-tuned on Dipole datasets v4. Below we compare a model trained only on B-Train data with one fine-tuned from the pre-trained model.

| model | validation/MSE | validation/MAE | validation/RMSE | validation/SMAPE |
|---|---|---|---|---|
| TFTMBI-44 | 5.5521e-09 | 3.23289e-05 | 7.45124e-05 | 0.000289265 |
| TFTMBI-50 | 5.88868e-10 | 1.53007e-05 | 2.42666e-05 | 4.46198e-05 |

TFTMBI-44 is trained on raw data, whereas TFTMBI-50 is fine-tuned from the pre-trained model.
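For reference, the four metrics in the table can be computed as below. This is a sketch, not the evaluation notebook's code: SMAPE has several conventions, and here it is assumed to be the mean of 2|ŷ - y| / (|y| + |ŷ|) expressed as a fraction rather than a percentage, which may differ from the definition used for the table.

```python
import math


def regression_metrics(y_true, y_pred):
    """MSE, MAE, RMSE and SMAPE (as a fraction) for two equal-length sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    # Terms where |t| + |p| == 0 contribute 0 to SMAPE
    smape = sum(
        2.0 * abs(p - t) / (abs(t) + abs(p)) if abs(t) + abs(p) > 0 else 0.0
        for t, p in zip(y_true, y_pred)
    ) / n
    return {"MSE": mse, "MAE": mae, "RMSE": math.sqrt(mse), "SMAPE": smape}
```

As a consistency check on the table, squaring the RMSE column reproduces the MSE column, e.g. (7.45124e-05)² ≈ 5.5521e-09 for TFTMBI-44.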

Evaluated in `~/cernbox/hysteresis/dipole/notebooks/mbi-dataset-v4/tft-eval-autoregressive.ipynb`.

On raw data

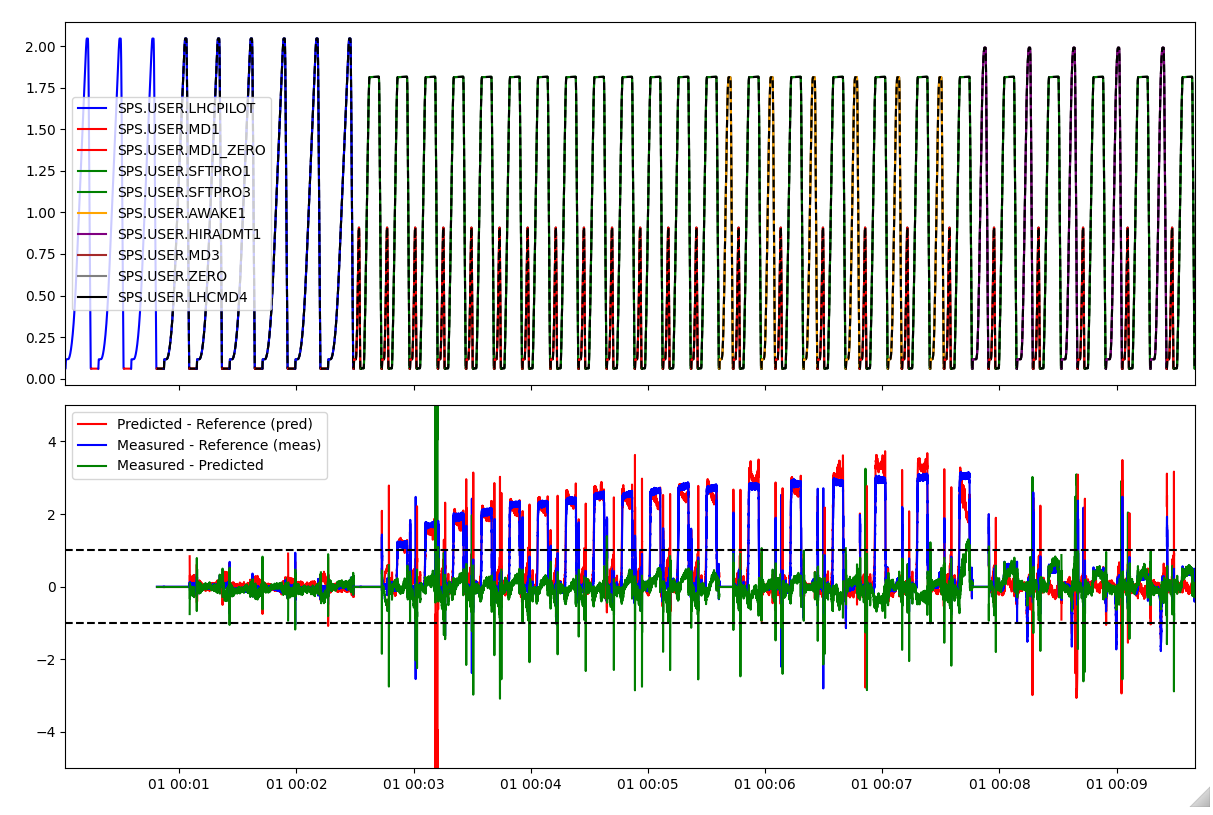

TFTMBI-44

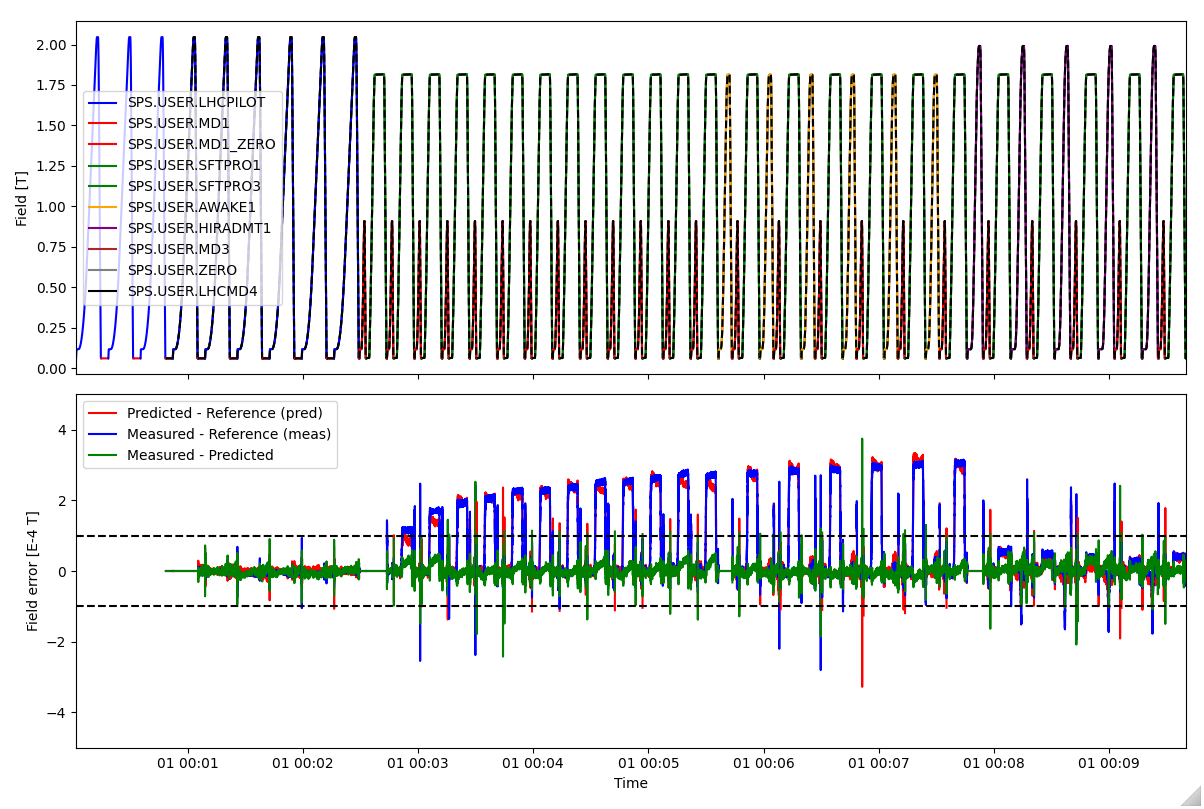

TFTMBI-50

- Try ensemble predictions to reduce prediction noise [due:: 2025-03-14]
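A minimal sketch of the ensembling idea in the task above: average several models' predictions point by point. If the members' prediction errors were independent with equal variance, the noise of the average would shrink roughly as 1/√N for N members; correlated errors reduce less. `ensemble_mean` is a hypothetical helper.

```python
from statistics import fmean


def ensemble_mean(predictions):
    """Point-wise mean of equal-length prediction sequences, one per ensemble member."""
    if not predictions:
        raise ValueError("need at least one prediction")
    length = len(predictions[0])
    if any(len(p) != length for p in predictions):
        raise ValueError("all predictions must have the same length")
    return [fmean(values) for values in zip(*predictions)]
```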