LSTMs and other types of sequence-to-sequence models are black-box models without an explicit time axis, as opposed to Neural ODEs. There are a few ways to pass the irregular time information to the neural network. This is especially useful when using Adaptive downsampling.

- Implement irregular time indices in transformertf [priority:: high] [completion:: 2024-08-06]

This needs to be implemented natively in transformertf for distributed training.

1. Positional encodings

Transformer-style architectures rely on positional encodings to inform the neural network of the order of the elements in a sequence, often using cos+sin transformations of the integer positions. By passing non-sequential integers to the cos+sin transform instead, it is possible to convey irregular time information to transformers.
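A minimal sketch of this idea, assuming the standard sinusoidal encoding with the usual base of 10000. The function name `sinusoidal_encoding` and the example indices are hypothetical and not part of transformertf. A real implementation would likely be vectorized with tensors.

```python
import math


def sinusoidal_encoding(positions, d_model, base=10000.0):
    """Return one sin/cos vector of size d_model per position.

    The positions do not have to be consecutive: gaps between them
    encode the irregular spacing of the samples.
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even")
    encodings = []
    for pos in positions:
        row = []
        for k in range(d_model // 2):
            # Each sin/cos pair uses a different wavelength.
            angle = pos / base ** (2 * k / d_model)
            row.extend((math.sin(angle), math.cos(angle)))
        encodings.append(row)
    return encodings


# Hypothetical sample indices kept after downsampling (non-sequential integers).
kept_indices = [0, 1, 2, 5, 9, 20]
pe = sinusoidal_encoding(kept_indices, d_model=8)
```

Each row of `pe` could then be added to (or concatenated with) the embedding of the corresponding sequence element, in the same way as a regular positional encoding.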

2. Relative time indices

Using the full available time axis $t$, we can compute $\Delta t_i = t_i - t_{i-1}$, i.e. the time between consecutive timestamps. This can be passed as an additional feature/covariate to the seq2seq model.

Important

The time-difference feature $\Delta t$ also has to be normalized. Care has to be taken if there are outliers in $\Delta t$, in which case a `log1p` transform might be appropriate prior to normalization, to avoid washing out fine-grained time features.
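The relative time feature could be sketched as follows. The function name `relative_time_feature` is hypothetical. The sketch assumes non-decreasing timestamps, a step of 0 for the first element, and standardization (zero mean, unit variance) as the normalization. In practice the mean and standard deviation would be fitted on the training set and reused for validation and prediction.

```python
import math
import statistics


def relative_time_feature(timestamps, use_log1p=True):
    """Return a standardized time-step feature, one value per timestamp."""
    # The first element has no predecessor; use a step of 0 (an assumption).
    deltas = [0.0] + [b - a for a, b in zip(timestamps, timestamps[1:])]
    if use_log1p:
        # Compress large gaps so they do not dominate the normalization.
        deltas = [math.log1p(d) for d in deltas]
    mean = statistics.fmean(deltas)
    std = statistics.pstdev(deltas)
    if std == 0.0:
        # All steps identical: the feature carries no information.
        return [0.0 for _ in deltas]
    return [(d - mean) / std for d in deltas]


# Hypothetical timestamps with one large gap (an outlier in the time steps).
timestamps = [0.0, 0.1, 0.2, 0.3, 10.0]
feature = relative_time_feature(timestamps)
```

Without the `log1p` step, the single large gap would inflate the standard deviation and squeeze the small steps toward the same value after normalization.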

3. Absolute time indices

Using the time axis $t$, for each sample the time axis will be $t_i, \dots, t_{i+n}$. We subtract $t_i$ from the whole sample time axis, so that each sample's time axis starts at $0$. Finally, the time axis needs to be divided by a normalizer to have all time values in $[0, 1]$.
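For a single sample, the shift and scaling described above might look like this. The function name `absolute_time_window` and its parameters are hypothetical, and the normalizer is assumed to be known already.

```python
def absolute_time_window(time_axis, start, length, normalizer):
    """Return the time axis of one sample, shifted to start at 0 and scaled."""
    window = time_axis[start:start + length]
    t0 = window[0]
    # Subtract the first timestamp, then divide by the dataset-wide normalizer.
    return [(t - t0) / normalizer for t in window]


# Hypothetical irregular time axis and a normalizer of 5 time units.
time_axis = [10.0, 10.5, 12.0, 15.0, 15.5]
sample_time = absolute_time_window(time_axis, start=1, length=3, normalizer=5.0)
```

Here `sample_time` starts at 0 and stays at or below 1 as long as no window spans more time than the normalizer.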

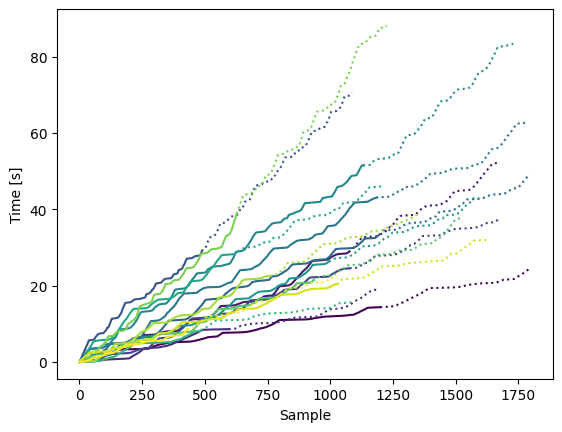

With random sequence length enabled for the encoder, we can see a spread of time evolutions. Dotted is the decoder input (i.e. prediction axis).

The normalizer can be found by iterating over the entire dataset with a window generator.
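One possible way to do this is sketched below, assuming the normalizer is the largest elapsed time found in any window. This choice is an assumption, as are the names `sliding_windows` and `find_time_normalizer`, which stand in for whatever window generator the dataset actually uses. With random sequence lengths, the maximum window length would be the one to scan with.

```python
def sliding_windows(time_axis, length, stride=1):
    """Yield consecutive windows of `length` timestamps."""
    for start in range(0, len(time_axis) - length + 1, stride):
        yield time_axis[start:start + length]


def find_time_normalizer(time_axis, length, stride=1):
    """Return the largest elapsed time covered by any window."""
    spans = [w[-1] - w[0] for w in sliding_windows(time_axis, length, stride)]
    if not spans:
        raise ValueError("time axis is shorter than the window length")
    return max(spans)


# Hypothetical time axis with a large gap in the middle.
time_axis = [0.0, 0.1, 0.2, 5.0, 5.1, 5.2]
normalizer = find_time_normalizer(time_axis, length=3)
```

Dividing each shifted window by this value keeps every sample's time axis within $[0, 1]$, with the longest-lasting window reaching exactly 1.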