Overview

MDs during summer 2023 suggested that knowing the current over the past few minutes is not sufficient to predict hysteresis, because the remanent field changes between MD days.

Instead, I suggest exploring an encoder-decoder model, with the (Phy)LSTM as the decoder and a separate encoder network that maps the initial physical state of the magnet to the (Phy)LSTM's initial latent state.

During inference, we start from a known physical state with a corresponding known latent state and step forward in time. This can be achieved by using a pre-cycle, which puts the magnet in a known initial state.

Training proceeds as for any encoder-decoder model. The only difference is that the encoder has access to both  and , while the decoder only has access to .
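The following is a minimal PyTorch sketch of this setup. It rests on several assumptions: the class names `StateEncoder` and `EncoderDecoderLSTM`, the layer sizes, and mean pooling over the encoder output are all hypothetical. A plain `nn.LSTM` stands in for the PhyLSTM, and the sketch is not the transformertf implementation. Following the choice recorded under Encoder, the hidden and cell states come from separate heads and are repeated across LSTM layers.

```python
import torch
from torch import nn


class StateEncoder(nn.Module):
    """Hypothetical Transformer encoder: past (current, field) window -> initial LSTM states."""

    def __init__(self, n_inputs=2, d_model=32, n_heads=4, hidden_size=64, num_lstm_layers=2):
        super().__init__()
        self.num_lstm_layers = num_lstm_layers
        self.embed = nn.Linear(n_inputs, d_model)
        layer = nn.TransformerEncoderLayer(d_model, n_heads, batch_first=True)
        self.transformer = nn.TransformerEncoder(layer, num_layers=2)
        # Separate heads for the hidden and the cell state.
        self.to_h = nn.Linear(d_model, hidden_size)
        self.to_c = nn.Linear(d_model, hidden_size)

    def forward(self, past):
        # past: (batch, past_len, n_inputs) -> pooled summary: (batch, d_model)
        summary = self.transformer(self.embed(past)).mean(dim=1)
        h0 = torch.tanh(self.to_h(summary))  # tanh keeps h0 in the LSTM's output range (a design choice)
        c0 = self.to_c(summary)
        # Repeat the same state for every LSTM layer: (num_layers, batch, hidden), as nn.LSTM expects.
        h0 = h0.unsqueeze(0).repeat(self.num_lstm_layers, 1, 1)
        c0 = c0.unsqueeze(0).repeat(self.num_lstm_layers, 1, 1)
        return h0, c0


class EncoderDecoderLSTM(nn.Module):
    """Plain LSTM decoder (stand-in for the PhyLSTM) initialised by StateEncoder."""

    def __init__(self, hidden_size=64, num_lstm_layers=2):
        super().__init__()
        self.encoder = StateEncoder(hidden_size=hidden_size, num_lstm_layers=num_lstm_layers)
        self.decoder = nn.LSTM(1, hidden_size, num_layers=num_lstm_layers, batch_first=True)
        self.head = nn.Linear(hidden_size, 1)

    def forward(self, past_current_field, future_current):
        # The encoder sees both current and field; the decoder only sees current.
        h0, c0 = self.encoder(past_current_field)
        out, _ = self.decoder(future_current, (h0, c0))
        return self.head(out)  # predicted field: (batch, future_len, 1)
```

Training would minimise, for example, the MSE between the prediction and the measured field. At inference, `past_current_field` would cover the pre-cycle, whose state is known.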

Implementation

The implementation can be split into the following tasks:

Encoder

- Implement Transformer-based encoder for PhyLSTM state encoding [priority:: medium] [completion:: 2024-08-21]

- Investigate whether the LSTM hidden and cell states should be repeated to match the number of LSTM layers, or whether the encoder should output one set of states per layer [priority:: medium] [completion:: 2024-08-21]

- Investigate strategies to train the state encoder in a self-supervised manner, to make use of abundant magnetic field data [priority:: medium] [completion:: 2024-08-21]

The hidden and cell states are computed separately and then repeated to match the number of layers.

The state encoder cannot be trained in a self-supervised manner.

Decoder

- Implement PhyLSTM with state encoder in transformertf. [priority:: medium] [completion:: 2024-08-21]

- Investigate an additional time-dependent component to capture rate-dependent hysteresis in the Bouc-Wen model. [priority:: medium] [completion:: 2024-05-24]

PETE has been implemented in transformertf (!21).

The additional rate-dependent component can be added using the differential equation from Eddy current decay in accelerator magnets.
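This sketch shows, under clearly stated assumptions, how a rate-dependent term can sit next to the Bouc-Wen hysteretic variable. The Bouc-Wen equation is the standard form. The extra term `e` is a generic first-order relaxation driven by the input rate, chosen only for illustration. It is **not** the equation from Eddy current decay in accelerator magnets, which may differ. The function name, parameters, and the forward-Euler integrator are all hypothetical.

```python
import numpy as np


def bouc_wen_with_rate_term(x, t, A=1.0, beta=0.5, gamma=0.5, n=1.0, k_rate=0.0, tau=1.0):
    """Forward-Euler integration of a Bouc-Wen hysteretic variable z driven by x(t),
    plus a hypothetical first-order rate-dependent (eddy-current-like) term e.

    dz/dt = A*xdot - beta*|xdot|*|z|**(n-1)*z - gamma*xdot*|z|**n   (Bouc-Wen)
    de/dt = (k_rate*xdot - e) / tau   (assumed relaxation, not the referenced equation)
    Requires n >= 1 so that |z|**(n-1) is finite at z = 0.
    """
    z = np.zeros(len(t))
    e = np.zeros(len(t))
    for i in range(1, len(t)):
        dt = t[i] - t[i - 1]
        xdot = (x[i] - x[i - 1]) / dt
        zp = z[i - 1]
        dz = A * xdot - beta * abs(xdot) * abs(zp) ** (n - 1) * zp - gamma * xdot * abs(zp) ** n
        z[i] = zp + dt * dz
        # Builds up during a ramp, decays with time constant tau once the input is flat.
        e[i] = e[i - 1] + dt * (k_rate * xdot - e[i - 1]) / tau
    return z, e
```

With a ramp followed by a flat top, `z` freezes as soon as the input stops changing. In contrast, `e` keeps decaying with time constant `tau`. This time dependence at constant input is the behaviour a purely rate-independent Bouc-Wen model cannot capture.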

Using the ground-truth field derivative

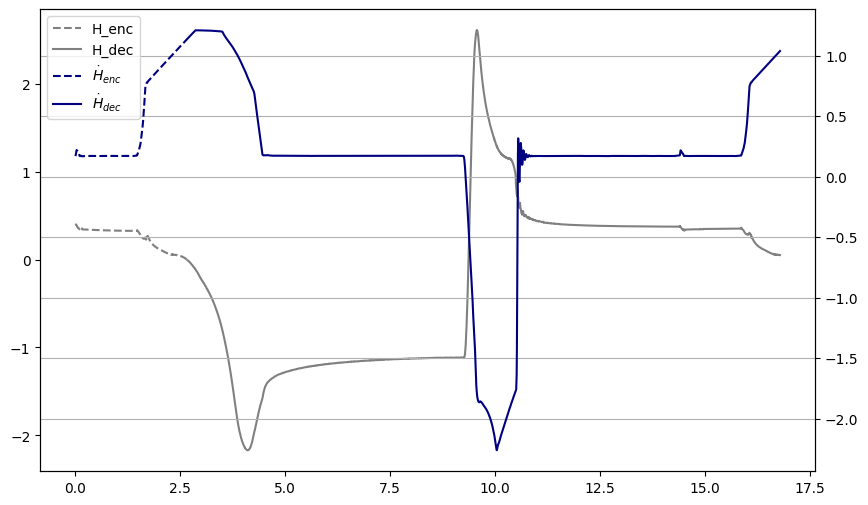

The field derivative is used in the second and third loss terms, which compute . Before it is used, the derivative of the equivalent piecewise linear function must be removed from it; otherwise the derivative will be incorrect.
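The sketch below assumes that the piecewise linear function maps current to field and is given by sorted breakpoints. It further assumes the model targets the residual B_res = B − f_pwl(I). Under those assumptions, the chain rule gives dB_res/dt = dB/dt − f_pwl'(I)·dI/dt. The function names, breakpoint arrays, and the linear extrapolation outside the breakpoint range are hypothetical.

```python
import numpy as np


def pwl_slope(current, breakpoints_i, breakpoints_b):
    """Slope dB/dI of a piecewise linear transfer function at each current value.

    Breakpoints must be sorted by current. At a breakpoint the right-hand segment
    is used; outside the range the nearest end segment is extrapolated (assumption).
    """
    slopes = np.diff(breakpoints_b) / np.diff(breakpoints_i)
    seg = np.searchsorted(breakpoints_i, current, side="right") - 1
    seg = np.clip(seg, 0, len(slopes) - 1)
    return slopes[seg]


def residual_field_derivative(db_dt, di_dt, current, breakpoints_i, breakpoints_b):
    """Chain rule with B = f_pwl(I) + B_res:  dB_res/dt = dB/dt - f_pwl'(I) * dI/dt."""
    return db_dt - pwl_slope(current, breakpoints_i, breakpoints_b) * di_dt
```

For example, with breakpoints `I = [0, 5, 10]` and `B = [0, 1, 1.5]`, the segment slopes are 0.2 and 0.1. A measured `dB/dt = 0.5` at `I = 2`, `dI/dt = 1` therefore leaves a residual derivative of 0.3.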

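Additionally, adaptive downsampling produces non-uniform time steps, which must be accounted for when differentiating. By default, torch.gradient assumes unit spacing. Its `spacing` argument accepts the actual sample coordinates, but only one 1-D coordinate tensor per differentiated dimension, shared across the whole batch, so sequences with different time stamps need separate handling.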
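The following sketch computes a batched derivative where every sequence has its own non-uniform time stamps, assuming inputs shaped `(batch, seq_len)`. The function name `time_derivative` is hypothetical. The interior uses the same non-uniform central-difference formula that torch.gradient applies to coordinate spacing. The edges use first-order one-sided differences, matching torch.gradient's default `edge_order=1`. As a result, each row should agree with `torch.gradient(x[i], spacing=(t[i],))`.

```python
import torch


def time_derivative(x: torch.Tensor, t: torch.Tensor) -> torch.Tensor:
    """dx/dt along the last dimension with per-sample, non-uniform time stamps.

    x, t: (batch, seq_len) with seq_len >= 3 and t strictly increasing per row.
    """
    dt = torch.diff(t, dim=-1)
    hl = dt[..., :-1]  # t[i] - t[i-1]
    hr = dt[..., 1:]   # t[i+1] - t[i]
    x_prev, x_mid, x_next = x[..., :-2], x[..., 1:-1], x[..., 2:]
    # Second-order central difference for unequal neighbour spacings.
    interior = (hl**2 * x_next - hr**2 * x_prev + (hr**2 - hl**2) * x_mid) / (hl * hr * (hl + hr))
    # First-order one-sided differences at both ends.
    first = (x[..., 1:2] - x[..., 0:1]) / dt[..., 0:1]
    last = (x[..., -1:] - x[..., -2:-1]) / dt[..., -1:]
    return torch.cat([first, interior, last], dim=-1)


t = torch.tensor([[-2.0, -1.0, 1.0, 4.0], [0.0, 1.0, 2.0, 3.0]])
x = torch.tensor([[4.0, 1.0, 1.0, 16.0], [0.0, 1.0, 4.0, 9.0]])
print(time_derivative(x, t))  # rows: [-3, -2, 2, 5] and [1, 2, 4, 5]
```

When all sequences in a batch share one time axis, calling `torch.gradient` with `spacing=(t,)` on that dimension is sufficient. The custom version is only needed when the time stamps differ per sequence.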