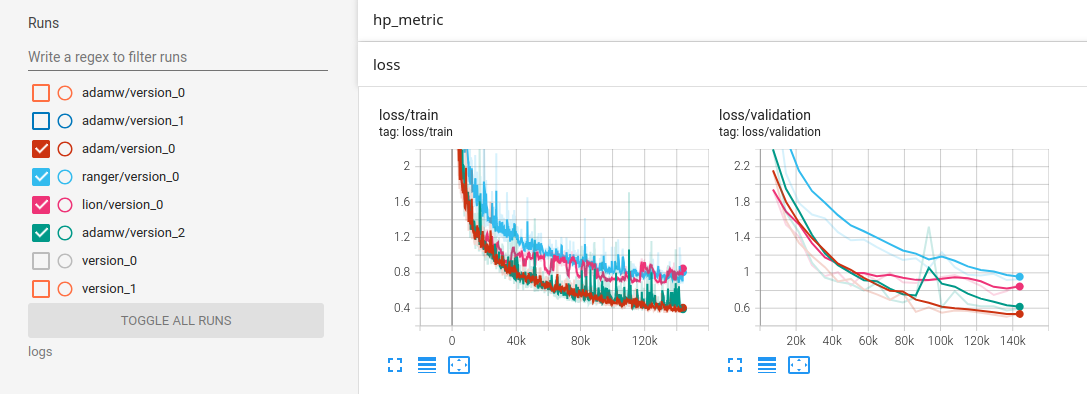

Four optimizers (Adam, AdamW, Ranger, and Lion) were each used to train a TFT on 24 h of simulated data with absolute time features, on 4 GPUs, with the following settings:

| Optimizer | Learning rate | Weight decay |
|---|---|---|
| Adam | 5e-4 | |
| AdamW | 5e-4 | 1e-2 |
| Ranger | 5e-4 | |
| Lion | 8e-5 | 1e-2 |
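For reproducibility, the settings in the table can be written down as a small configuration mapping. This is only a minimal sketch: the names `OPTIMIZER_SETTINGS` and `optimizer_kwargs` are hypothetical, and it assumes that a blank weight-decay cell means the argument was not set explicitly, so the optimizer's own default applied.

```python
# Hyperparameters copied from the table above.
# Assumption: a blank weight-decay cell means the value was not passed,
# so the key is omitted and the optimizer's default would apply.
OPTIMIZER_SETTINGS = {
    "Adam": {"lr": 5e-4},
    "AdamW": {"lr": 5e-4, "weight_decay": 1e-2},
    "Ranger": {"lr": 5e-4},
    "Lion": {"lr": 8e-5, "weight_decay": 1e-2},
}


def optimizer_kwargs(name: str) -> dict:
    """Return a copy of the keyword arguments for the named optimizer.

    Hypothetical helper, not part of the original experiment code.
    """
    if name not in OPTIMIZER_SETTINGS:
        raise ValueError(f"Unknown optimizer: {name!r}")
    # Copy so callers cannot mutate the shared settings.
    return dict(OPTIMIZER_SETTINGS[name])


if __name__ == "__main__":
    for name in OPTIMIZER_SETTINGS:
        print(name, optimizer_kwargs(name))
```

With PyTorch, the result could be unpacked into an optimizer constructor, for example `torch.optim.AdamW(model.parameters(), **optimizer_kwargs("AdamW"))`, where `model` stands in for the TFT instance. Ranger and Lion are not part of `torch.optim`, so they would need the implementations from whichever packages the experiment used.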

Based on this experiment, there seems to be no need to use Ranger or Lion; we can stick with Adam or AdamW instead.