Differentiable Preisach Model with Neural Networks

The approach of [@rousselDifferentiablePreisachModeling2022] has several shortcomings when it comes to scaling to large data volumes:

- The optimization routine is single-batch, i.e. it does not support minibatch training

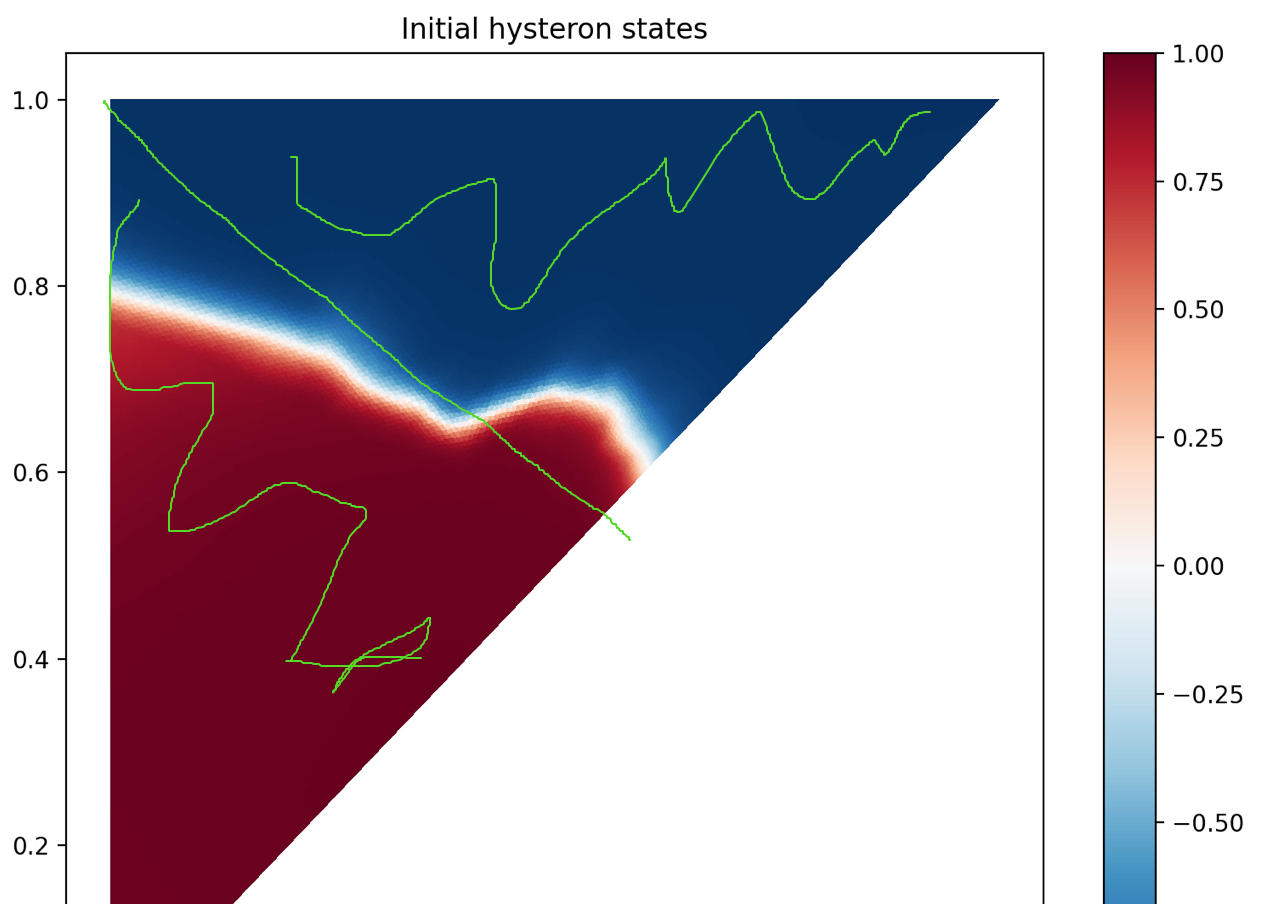

- The initial magnetization is fixed (fully demagnetized, all )

- The mesh is fixed in size (i.e. in the number of hysterons), and the hysteron weights are learned with gradient descent, at the same time as the scaling

- Each mesh point is tied to an initial state, i.e. there is a one-to-one mapping between initial states and mesh points

- The scaling of magnetization is critical, since , and predictions of are normalized. Then when we unscale to B, the scales are implicit.

- The scaling takes care of the primarily linear relationship
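To make the fixed-mesh limitation concrete, here is a minimal sketch of a discrete Preisach model in plain Python. It is not the implementation from the cited work: the function names, the unit-normalized field range `[0, 1]`, the uniform placeholder weights, and the negative-saturation starting state are all assumptions chosen for illustration. The point is that the state list has exactly one entry per mesh point, so changing the mesh size invalidates both the stored states and the learned weights.

```python
def make_mesh(n):
    """Regular mesh over the Preisach triangle beta <= alpha in [0, 1]^2."""
    grid = [i / (n - 1) for i in range(n)]
    return [(a, b) for a in grid for b in grid if b <= a]


def update_states(states, mesh, h_prev, h):
    """Relay update: switch up on a rising field that reaches alpha,
    switch down on a falling field that reaches beta, else keep the state."""
    new_states = []
    for s, (alpha, beta) in zip(states, mesh):
        if h > h_prev and h >= alpha:
            s = 1.0
        elif h < h_prev and h <= beta:
            s = -1.0
        new_states.append(s)
    return new_states


def magnetization(states, weights):
    """Weighted average of hysteron states, so the output lies in [-1, 1]."""
    return sum(w * s for w, s in zip(weights, states)) / sum(weights)


mesh = make_mesh(5)              # fixed mesh: 15 hysterons
weights = [1.0] * len(mesh)      # placeholder for the learned weights
states = [-1.0] * len(mesh)      # one stored state per mesh point (assumed start)

h_prev, outputs = 0.0, []
for h in [1.0, 0.5, 0.0]:        # normalized applied field sequence
    states = update_states(states, mesh, h_prev, h)
    outputs.append(magnetization(states, weights))
    h_prev = h
```

The normalized output still has to be mapped to physical units by a separate scale and offset, which is why the scaling item above matters.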

Proposal

- Learn relationship for using a fully connected layer

- Use a mesh that can be sampled at inference time with arbitrary number of points

- Use an encoder to encode an initial state distribution

- Fully connected layer allows arbitrary number of mesh points

- At training time, the pre-sampled mesh can be randomly perturbed per sample to improve the robustness of the MLP

- Allows minibatch training, since the initial states are generated by the encoder

- Still limited in mesh points for the activation of states.
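One possible reading of the mesh-related items above is sketched below: a small fully connected network maps mesh coordinates `(alpha, beta)` to a positive hysteron weight, so the same parameters can be evaluated on a mesh of any size, and the mesh can be jittered during training. Everything here is an assumption for illustration (the names `sample_mesh`, `perturb`, `init_mlp`, `density`, the tanh/softplus choices, the hidden width, and the noise level); the encoder for the initial states is left out, and no training loop is shown.

```python
import math
import random


def sample_mesh(n, rng):
    """Draw n points uniformly from the triangle beta <= alpha in [0, 1]^2."""
    points = []
    for _ in range(n):
        u, v = rng.random(), rng.random()
        points.append((max(u, v), min(u, v)))  # sorting keeps beta <= alpha
    return points


def perturb(mesh, sigma, rng):
    """Jitter mesh points with Gaussian noise, then project back to the triangle."""
    out = []
    for a, b in mesh:
        a = min(1.0, max(0.0, a + rng.gauss(0.0, sigma)))
        b = min(1.0, max(0.0, b + rng.gauss(0.0, sigma)))
        out.append((max(a, b), min(a, b)))
    return out


def init_mlp(hidden, rng):
    """Random parameters for a one-hidden-layer network (untrained)."""
    return {
        "w1": [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.gauss(0.0, 1.0) for _ in range(hidden)],
        "b2": 0.0,
    }


def density(params, alpha, beta):
    """Map one mesh point to a positive weight: tanh hidden layer, softplus output."""
    hid = [math.tanh(w[0] * alpha + w[1] * beta + b)
           for w, b in zip(params["w1"], params["b1"])]
    z = sum(w * h for w, h in zip(params["w2"], hid)) + params["b2"]
    return math.log1p(math.exp(z))


rng = random.Random(0)
params = init_mlp(8, rng)

# The same parameters serve a coarse and a fine mesh.
weights_by_size = {}
for n in (10, 1000):
    mesh = perturb(sample_mesh(n, rng), 0.01, rng)
    weights_by_size[n] = [density(params, a, b) for a, b in mesh]
```

Because the weights are a function of the coordinates rather than a table indexed by mesh point, the number of mesh points becomes an inference-time choice instead of a property of the trained model.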

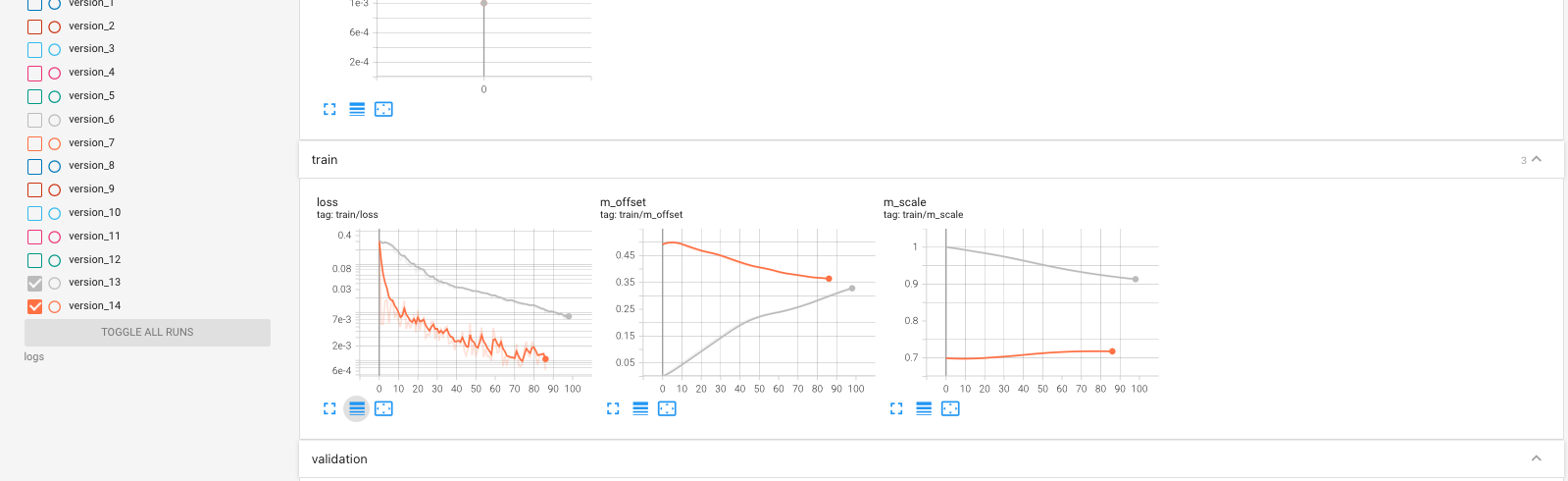

Fitting the scale analytically at the beginning of training gives a clear improvement (gray: without, orange: with). Although the offset appears to converge to a similar value in both cases, the loss decreases much faster with the analytical fit.
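The notes do not spell out how the analytical fit is computed. A minimal sketch, assuming it is an ordinary least-squares fit of a scale and an offset that map the normalized model output onto the measured values, could look as follows; `fit_scale_offset` and the toy data are hypothetical.

```python
def fit_scale_offset(pred, target):
    """Closed-form least squares for target ~ scale * pred + offset."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    var = sum((p - mean_p) ** 2 for p in pred)
    scale = cov / var
    offset = mean_t - scale * mean_p
    return scale, offset


# Toy data: normalized predictions and targets generated with scale 2.0, offset 0.3.
pred = [-1.0, -0.5, 0.0, 0.5, 1.0]
target = [2.0 * p + 0.3 for p in pred]

scale, offset = fit_scale_offset(pred, target)
```

Initializing the scale and offset this way would let gradient descent start from the best linear fit instead of having to discover it.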

We want as